Stress Testing Microservices - How API Mocks Simplify & Scale Load Tests

Performance testing is a critical phase in software development. It helps ensure that your application can handle the expected load without performance bottlenecks. However, testing a system that depends on external services raises several challenges, and some mistakes are easy to make. In this article, we explore these challenges, discuss common mistakes made by Software Development Engineers in Test (SDETs), and share best practices for addressing them.

The Challenge: Load testing without impacting dependencies or external services

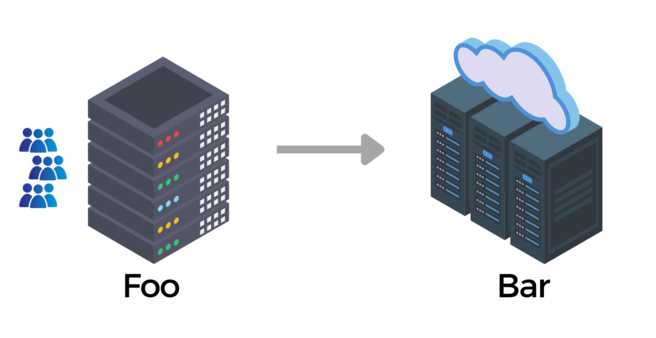

Imagine you are an SDET responsible for testing the performance of the Foo microservice. Foo relies on the Bar service for some of its functionality. Here's the challenge: how can you run a repeatable load test for Foo that doesn't depend on Bar's behavior and avoids the high cost of calling the real Bar service during testing?

Common challenges

- Scalability Issues with Bar: When the Bar service is provisioned in a sandbox environment, you may have limited control over its scalability. Under heavy load, Bar may exhibit higher latencies or timeouts, which can distort your performance test results.

- Additional Cost: Load testing against the real service adds expense, especially with third-party APIs that charge per API call. This cost can be a considerable obstacle to a cost-effective, repeatable load testing strategy.

- Creating Your Own Stub for Bar: Some SDETs opt to build their own stub or mock server for Bar. However, this is time-consuming, distracts from the original testing goal, and extends project timelines.

- Intelligent Stub Development: If Foo relies on specific data in Bar's responses, such as an entity ID, creating an intelligent stub for Bar becomes challenging and time-intensive. Additionally, testing this new stub for correctness can be complex.

The Solution: Use a hosted no-code mock server

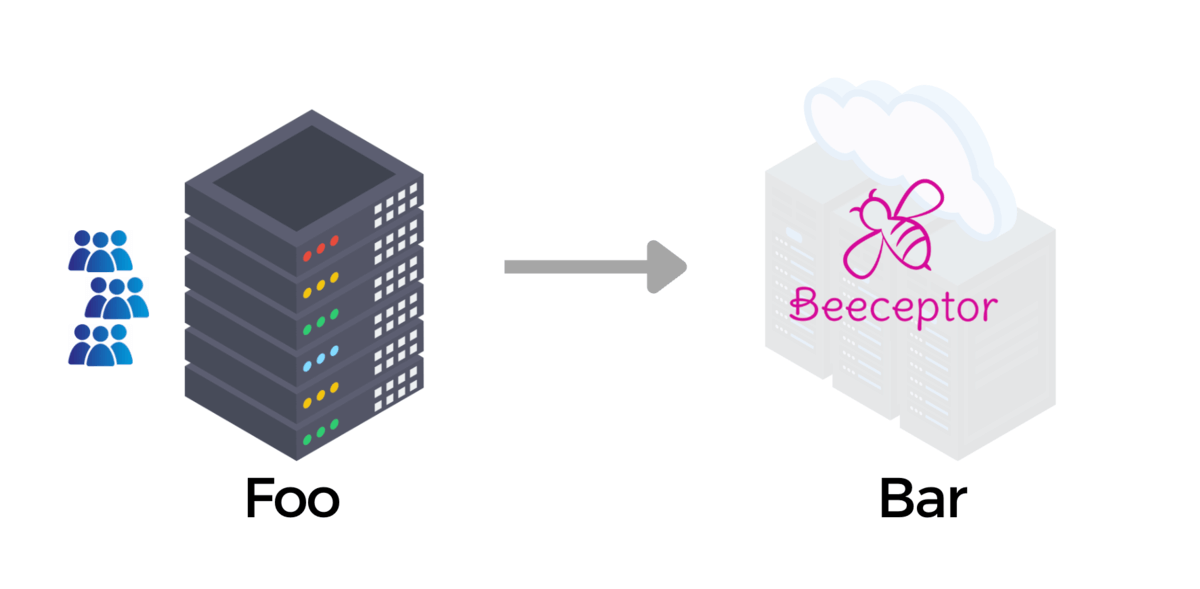

To address these challenges, consider using a mock server. A mock server replicates the behavior of the external service (Bar) and generates responses based on predefined configurations. This approach empowers QA teams to simulate various scenarios, including predictable or variable latencies of the external service, failures, and more.
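To illustrate what a mock server does under the hood, here is a minimal sketch of a hand-written mock for Bar using only Python's standard library. It is not Beeceptor or any hosted product, and the paths, bodies, and latencies in `MOCK_RULES` are hypothetical "predefined configurations". Each rule maps a path to a status code, a JSON body, and a latency, which covers both the latency and the failure scenarios described above.

```python
import json
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical mock rules: path -> (status code, JSON body, latency in seconds).
MOCK_RULES = {
    "/bar/items": (200, {"items": [{"id": "item-1"}]}, 0.05),
    "/bar/health": (503, {"error": "unavailable"}, 0.0),  # simulated failure
}


class MockBarHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Match rules on the path only, ignoring any query string.
        path = self.path.split("?", 1)[0]
        status, body, latency = MOCK_RULES.get(path, (404, {"error": "no rule"}, 0.0))
        time.sleep(latency)  # simulate Bar's configured response time
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # keep per-request logging out of the load-test output


def start_mock_server(port=0):
    """Serve the mock in a background thread; port=0 lets the OS pick a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), MockBarHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Pointing Foo's Bar base URL at `http://127.0.0.1:<port>` would route its calls to this mock. Python's built-in HTTP server is not designed for high throughput, so treat this as an illustration of the concept rather than a load-test-grade mock; that scalability gap is one reason to prefer a purpose-built mock server.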

Benefits of Using a No-Code Hosted Mock Server:

- Instant Provisioning: No-code mock servers can be provisioned instantly, allowing you to start testing without delay.

- Behavior Simulation: You can record the external service's behavior once and replay it during testing. This simplifies onboarding, because you capture and mock only the behaviors you need.

- Cost-Efficiency: By using a mock server, you avoid incurring high costs associated with external service usage during load testing.

- Scalability Control: You gain control over the scalability and behavior of the mock server, ensuring consistent and reliable test results.

- Response Correctness: Mock servers make it easier to generate correct responses and validate the interactions between your system (Foo) and the external service.

A mock server like Beeceptor is a hosted, no-code solution that gets you started with minimal configuration. You get full control over the service's behavior, which makes your tests predictable.

Best practices for load testing with mock APIs

When load testing with mock APIs, follow a few guidelines to keep your tests accurate, efficient, and predictable. They will help you save time and avoid errors. Here are the key principles to keep in mind:

- Define Clear Testing Goals: Start by precisely outlining your testing objectives and documenting them thoroughly. Understand the technology stack behind the use case you're testing, including the roles of microservices and external dependencies. Determine which aspects of the system you want to evaluate under load, and minimize dependencies to reduce the test footprint and keep results repeatable.

- Select the Appropriate Mock Server Tool: Choose a mock server tool that aligns with your project's requirements. Evaluate its capabilities in advance by modeling your use cases as trial runs. Pay particular attention to scalability and latency predictability: the goal is to reduce dependencies and make sure that any new variable you introduce into the test setup is a predictable one. Also consider the ability to simulate various behaviors and to configure stubs or mock rules programmatically.

- Record and Replay: Use a mock server such as Beeceptor to record scenarios by intercepting HTTP traffic between your application and the service being mocked. This simplifies payload discovery. Once responses are recorded, you can replay them for subsequent calls, closely mimicking real-world usage patterns.

- Utilize Dynamic Responses: If your application consumes data from API responses, avoid responding with fixed, predetermined data. For example, if your application expects unique IDs, configure the mock server to return a different ID for each request. Similarly, if your application relies on specific request variables or query parameters, make sure the response includes them.

- Simulate Real Latencies: Add latency to mock server responses to realistically simulate network delays and service response times. Often, a fixed delay such as 500 ms is the better choice, because it keeps the mock's behavior predictable.

- Implement Variable Load Profiles: Test under various load profiles, including steady-state loads, ramp-up and ramp-down scenarios, and peak loads, to understand system behavior under different conditions. Confirm that any variations you observe are not caused by the mock server you introduced into the stack.

- Monitor and Measure Performance: Continuously monitor the mock server's performance metrics, including its latencies, and aim to keep the standard deviation of mock latencies low. Also measure response times, throughput, error rates, and resource utilization.

- Simulate Failure Scenarios: Include scenarios in your testing plan where the mock server simulates service failures, timeouts, or unavailability. This helps assess how your application handles these challenging situations.

- Integrate with Continuous Integration (CI) and Automation: Integrate mock server setups and configurations into your CI/CD pipeline to automate performance tests. This ensures frequent and consistent testing. Store mock server configurations and expected responses in source control so setup is easy and programmatic. If you use Beeceptor, its mock rule management APIs let you do this.

- Implement Data Validation: Validate that the data your application sends and receives has the expected format and content. Successful calls to the mock endpoint do not guarantee that your application is working correctly. Before starting the load test, verify that the use case works as expected and that your application correctly consumes the mock data.

- Validate Requests with the Mock Server: Make it easier for all team members to work with consistent test data by validating the requests that reach the mock server. For example, while the load test is running, you can review all requests to and responses from the mock endpoint on the mock server's dashboard, inspecting payloads, headers, and status codes.

- Address Security Considerations: Ensure that sensitive data and security mechanisms such as authentication and authorization are accurately simulated by the mock server.

- Clean Up After Tests: After completing performance tests, perform a thorough cleanup to remove any data or configurations created by the mock server. This keeps the testing environment clean so it won't affect subsequent functional or load testing sessions.
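The Record and Replay practice can be illustrated with a small in-memory sketch. It does not describe how Beeceptor works internally: `upstream` is a hypothetical callable standing in for a real call to Bar, and responses are keyed by HTTP method and path only (a real recorder would usually also consider query strings and request bodies). In record mode each call goes to the real service and is saved; in replay mode the saved response is returned without touching Bar.

```python
import json
from typing import Callable, Dict, Tuple

Response = Tuple[int, str]  # (status code, body)


class RecordReplay:
    """Record responses from the real upstream once, then replay them offline."""

    def __init__(self, upstream: Callable[[str, str], Response]):
        self.upstream = upstream  # hypothetical: performs the real call to Bar
        self.recordings: Dict[str, Response] = {}
        self.mode = "record"

    @staticmethod
    def _key(method: str, path: str) -> str:
        return f"{method.upper()} {path}"

    def handle(self, method: str, path: str) -> Response:
        key = self._key(method, path)
        if self.mode == "record":
            response = self.upstream(method, path)
            self.recordings[key] = response  # save for later replay
            return response
        # Replay mode: never call the upstream service.
        return self.recordings.get(key, (404, "no recording for " + key))

    def dump(self) -> str:
        """Serialize recordings as JSON so they can be committed to source control."""
        return json.dumps({k: list(v) for k, v in self.recordings.items()})
```

During a load test, only replay mode would run, so Bar sees no traffic at all.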
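For the Utilize Dynamic Responses practice, the sketch below builds a response body that carries a fresh UUID on every call and echoes the request's query parameters back. The `id` and `echo` field names are hypothetical; adapt them to whatever shape Foo actually reads from Bar.

```python
import json
import uuid
from urllib.parse import parse_qs, urlsplit


def dynamic_response(request_path: str) -> str:
    """Return a JSON body with a unique ID and the request's query parameters echoed."""
    query = parse_qs(urlsplit(request_path).query)
    body = {
        # A new ID per request, so Foo never receives duplicate entity IDs.
        "id": str(uuid.uuid4()),
        # Single-valued parameters are echoed as plain strings, repeated ones as lists.
        "echo": {k: v[0] if len(v) == 1 else v for k, v in query.items()},
    }
    return json.dumps(body)
```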
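The Simulate Real Latencies practice amounts to wrapping each mock handler in a delay. This sketch assumes a synchronous handler and uses a fixed delay (for example 500 ms) with optional uniform jitter; passing a seeded `random.Random` keeps jittered runs reproducible. The `with_latency` name is illustrative.

```python
import random
import time
from typing import Callable, Optional


def with_latency(handler: Callable[[], str], fixed_ms: float,
                 jitter_ms: float = 0.0,
                 rng: Optional[random.Random] = None) -> Callable[[], str]:
    """Wrap a mock handler so each response is delayed by fixed_ms +/- jitter_ms."""
    rng = rng or random.Random()

    def delayed() -> str:
        delay_ms = fixed_ms + rng.uniform(-jitter_ms, jitter_ms)
        time.sleep(max(delay_ms, 0.0) / 1000.0)  # never sleep a negative duration
        return handler()

    return delayed
```

Leaving `jitter_ms` at zero gives the fixed, predictable delay the practice recommends; jitter is useful only when you deliberately want to test Foo against variable latency.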
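A load profile like those described under Implement Variable Load Profiles can be expressed as a list of target request rates, one per second. This sketch assumes a linear ramp up to a peak, a steady phase at that peak, and a linear ramp down to zero. Feeding the list to an actual load generator is outside the scope of the example.

```python
from typing import List


def load_profile(ramp_up_s: int, steady_s: int, ramp_down_s: int, peak_rps: int) -> List[int]:
    """Target requests per second for each second of a ramp-up/steady/ramp-down run."""
    profile: List[int] = []
    for t in range(ramp_up_s):
        profile.append(round(peak_rps * (t + 1) / ramp_up_s))  # linear ramp up to peak
    profile.extend([peak_rps] * steady_s)  # hold at peak load
    for t in range(ramp_down_s):
        profile.append(round(peak_rps * (ramp_down_s - t - 1) / ramp_down_s))  # ramp down to 0
    return profile
```

For example, `load_profile(4, 2, 4, 100)` returns `[25, 50, 75, 100, 100, 100, 75, 50, 25, 0]`. Running the same profile against the mock alone first is one way to confirm that latency variations come from Foo rather than from the mock.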
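For Monitor and Measure Performance, the sketch below summarizes per-request samples collected during a run: mean latency, standard deviation (the figure to keep low for the mock itself), nearest-rank p95, error rate, and throughput. It assumes each sample is a `(latency_ms, status_code)` pair, that at least one sample exists, and that any status of 400 or above counts as an error.

```python
import math
import statistics
from typing import Dict, List, Tuple


def summarize(samples: List[Tuple[float, int]], duration_s: float) -> Dict[str, float]:
    """Summarize (latency_ms, status_code) samples from one load-test run."""
    latencies = sorted(lat for lat, _ in samples)
    errors = sum(1 for _, status in samples if status >= 400)
    # Nearest-rank 95th percentile.
    p95 = latencies[max(math.ceil(0.95 * len(latencies)) - 1, 0)]
    return {
        "mean_ms": statistics.mean(latencies),
        "stdev_ms": statistics.stdev(latencies) if len(latencies) > 1 else 0.0,
        "p95_ms": p95,
        "error_rate": errors / len(samples),
        "throughput_rps": len(samples) / duration_s,
    }
```

Computing this summary separately for calls to the mock and for Foo's own responses makes it easier to tell whether a regression comes from Foo or from the test harness.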
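Failure scenarios can be injected at a controlled rate. In this sketch, `FailureInjector` is a hypothetical helper that decides, for each request, whether the mock should answer normally, return an error (for example HTTP 503), or stall long enough to trigger Foo's client timeout. The rates are assumptions, and a fixed seed makes a failure run repeatable.

```python
import random
from typing import Optional


class FailureInjector:
    """Pick a per-request outcome: 'ok', 'error', or 'timeout'."""

    def __init__(self, error_rate: float, timeout_rate: float, seed: Optional[int] = None):
        if error_rate < 0 or timeout_rate < 0 or error_rate + timeout_rate > 1:
            raise ValueError("rates must be non-negative and sum to at most 1")
        self.error_rate = error_rate
        self.timeout_rate = timeout_rate
        self.rng = random.Random(seed)  # seeded so the same failure sequence can be replayed

    def next_outcome(self) -> str:
        roll = self.rng.random()  # uniform in [0, 1)
        if roll < self.error_rate:
            return "error"  # respond with e.g. HTTP 503
        if roll < self.error_rate + self.timeout_rate:
            return "timeout"  # hold the response past Foo's client timeout
        return "ok"
```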
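To keep mock configuration in source control, as suggested under Integrate with Continuous Integration (CI) and Automation, the rules can live in a JSON file that a CI job parses and sanity-checks before any load test starts. The rule schema below (`method`, `path`, `status`, `delay_ms`) is hypothetical. Pushing the rules to a mock server is deliberately left out: with Beeceptor you would use its mock rule management APIs, whose endpoints are not reproduced here.

```python
import json
from typing import Any, Dict, List

# Hypothetical rule file content, e.g. committed as mocks/bar-rules.json.
RULES_JSON = """
[
  {"method": "GET", "path": "/bar/items", "status": 200, "delay_ms": 500},
  {"method": "POST", "path": "/bar/orders", "status": 201, "delay_ms": 500}
]
"""

REQUIRED_KEYS = {"method", "path", "status", "delay_ms"}


def load_rules(text: str) -> List[Dict[str, Any]]:
    """Parse and sanity-check mock rules so a bad commit fails the CI job early."""
    rules = json.loads(text)
    for i, rule in enumerate(rules):
        missing = REQUIRED_KEYS - set(rule)
        if missing:
            raise ValueError(f"rule {i} is missing {sorted(missing)}")
        if not 100 <= rule["status"] <= 599:
            raise ValueError(f"rule {i} has invalid status {rule['status']}")
    return rules
```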
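A pre-flight check for the Implement Data Validation practice can be as simple as comparing a mocked payload against the fields and types Foo depends on. `EXPECTED_FIELDS` is a hypothetical contract; running the check once against a real mock response before the load test starts catches mock data that Foo cannot use.

```python
from typing import Any, Dict, List

# Hypothetical contract for a Bar response that Foo consumes.
EXPECTED_FIELDS = {"id": str, "status": str, "amount": (int, float)}


def validate_payload(payload: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the payload matches the contract."""
    problems = []
    for field, expected_type in EXPECTED_FIELDS.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            problems.append(f"wrong type for {field}: {type(payload[field]).__name__}")
    return problems
```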
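Reviewing requests, as described under Validate Requests with the Mock Server, usually happens on the mock server's dashboard. If you want the same checks in code, a sketch like the following records each request the mock receives and answers two common questions: which status codes were returned, and which requests arrived without a header Foo is supposed to send. `LoggedRequest` and `RequestLog` are illustrative names, not part of any product.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class LoggedRequest:
    method: str
    path: str
    headers: Dict[str, str]
    body: str
    status: int  # status code the mock returned


@dataclass
class RequestLog:
    entries: List[LoggedRequest] = field(default_factory=list)

    def record(self, entry: LoggedRequest) -> None:
        self.entries.append(entry)

    def status_counts(self) -> Counter:
        """How many responses the mock returned per status code."""
        return Counter(e.status for e in self.entries)

    def missing_header(self, name: str) -> List[LoggedRequest]:
        """Requests that arrived without the given header (case-insensitive)."""
        wanted = name.lower()
        return [e for e in self.entries if wanted not in {k.lower() for k in e.headers}]
```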
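For Address Security Considerations, the mock can enforce the same kind of authentication as Bar so that Foo's auth path is exercised under load. This sketch assumes Bar uses bearer tokens in the `Authorization` header; the token set is hypothetical test data, and a missing, malformed, or unknown token yields HTTP 401.

```python
from typing import Dict, Tuple

# Hypothetical tokens issued for the test environment; never reuse production secrets.
VALID_TOKENS = {"test-token-123"}


def check_auth(headers: Dict[str, str]) -> Tuple[int, str]:
    """Mimic Bar's auth check: 401 unless a known bearer token is present."""
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value for name, value in headers.items()}
    scheme, _, token = normalized.get("authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not token:
        return (401, "missing or malformed bearer token")
    if token not in VALID_TOKENS:
        return (401, "unknown token")
    return (200, "ok")
```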
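The Clean Up After Tests practice fits naturally into a context manager that installs rules for one run and restores the previous state even if the test fails. Here `registry` is an in-memory dictionary standing in for the mock server's rule store, an assumption made to keep the sketch self-contained.

```python
from contextlib import contextmanager
from typing import Dict, Iterator, List

_MISSING = object()  # marker for "no rule existed under this key before"


@contextmanager
def temporary_rules(registry: Dict[str, dict], rules: List[dict]) -> Iterator[None]:
    """Install mock rules for one test run, then restore the registry's previous state."""
    previous: Dict[str, object] = {}
    try:
        for rule in rules:
            key = f"{rule['method']} {rule['path']}"
            if key not in previous:
                previous[key] = registry.get(key, _MISSING)  # remember what we overwrite
            registry[key] = rule
        yield
    finally:
        # Runs even if the load test raises, so the next session starts clean.
        for key, old in previous.items():
            if old is _MISSING:
                registry.pop(key, None)
            else:
                registry[key] = old
```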

Following these best practices will help you achieve accurate and reliable load testing results while ensuring the predictability and efficiency of your testing process.